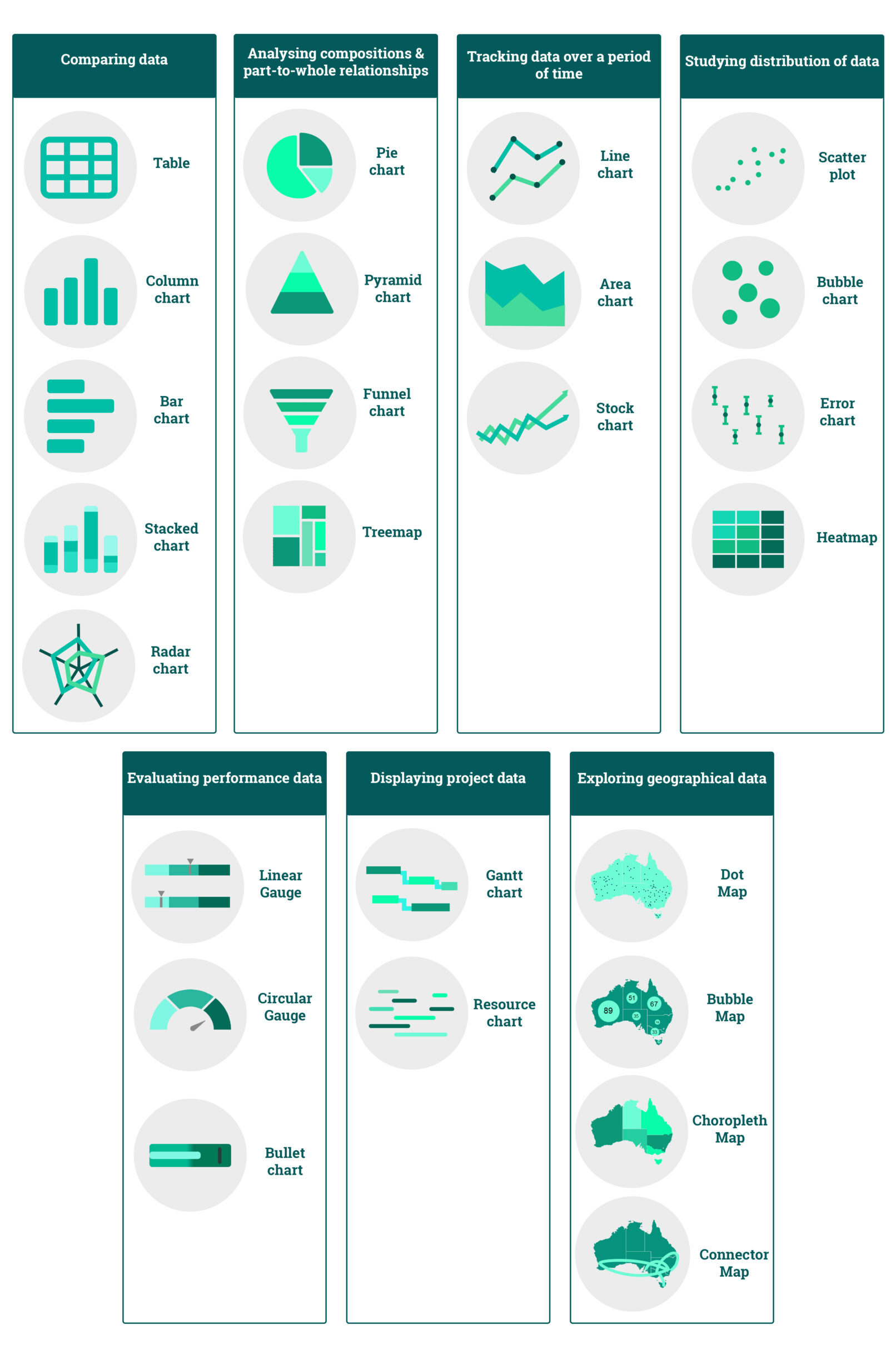

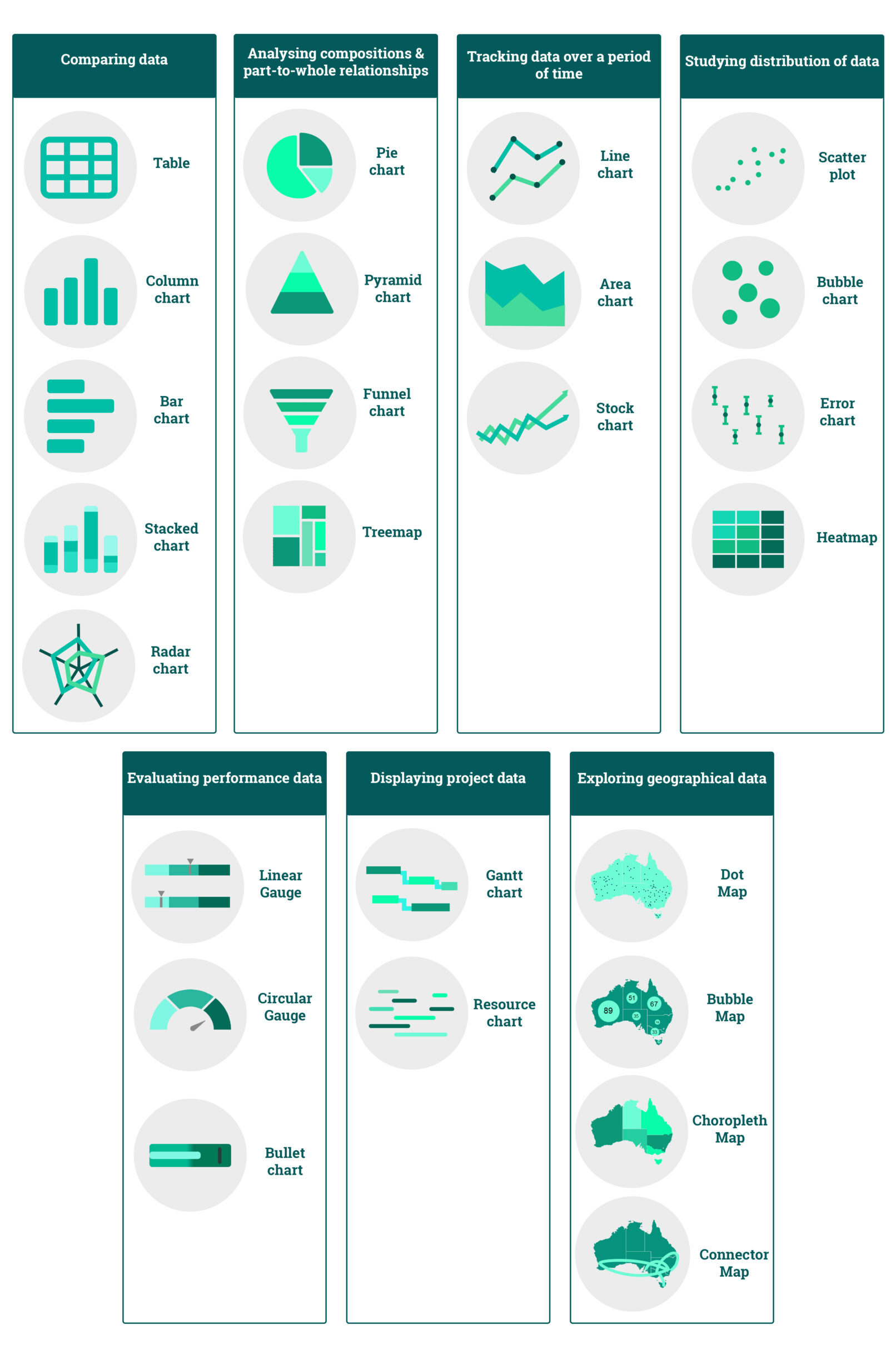

Cheatsheet for Charts: Pair the Right Chart with the Right Set of Data

To ensure your message conveyed through your data is clear and correct, you need to use the right charts to visualize it.

Dec 11, 2020 | Article

Today’s global enterprises store exponentially growing volumes of data across complex combinations of on-premises and cloud environments. As a result, managing this data, and more importantly mining it for insight, is a primary challenge for almost every organization. A data fabric addresses these issues by creating a highly flexible data management environment that adapts as the underlying technology changes.

What is a Data Fabric?

A data fabric is a single environment, combining architecture and technology, that is designed to ease the complexity of managing dynamic, distributed, and diverse data. According to Ted Dunning, a data fabric lets you run the right applications at the right time, in the right place, and against the right data. It helps organizations simplify data management and speed up IT delivery by eliminating point-to-point integration.
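To see why eliminating point-to-point integration matters, a rough count of connections helps. The sketch below assumes that every one of n sources must feed every one of m consumers; the function names are hypothetical, and the model deliberately ignores real-world details such as connector reuse.

```python
def point_to_point_links(sources: int, consumers: int) -> int:
    """Every source is wired directly to every consumer."""
    return sources * consumers


def fabric_links(sources: int, consumers: int) -> int:
    """Every source and every consumer connects once, to the shared fabric."""
    return sources + consumers


if __name__ == "__main__":
    for n, m in [(3, 4), (10, 20), (50, 100)]:
        print(f"{n} sources x {m} consumers: "
              f"point-to-point={point_to_point_links(n, m)}, "
              f"fabric={fabric_links(n, m)}")
```

Under this simplified model, 50 sources and 100 consumers need 5,000 direct integrations but only 150 connections to a shared fabric, because the count grows as a product in one case and as a sum in the other.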

Data Fabric Components

To obtain seamless, real-time integration of distributed data, a data fabric needs many components, including the following:

1. Data Ingestion

The ingestion layer must work with every potential data format, both structured and unstructured, from a variety of sources, including data streams, applications, cloud sources, and databases. It must also support batch, real-time, and stream processing.
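A minimal Python sketch of the idea, assuming a simple common record shape (a `source` name plus a `payload`) that every ingestion path produces. The function and source names are hypothetical. A batch CSV export, a stream of JSON events, and unstructured text all land in the same shape, so later layers can treat them uniformly.

```python
import csv
import io
import json
from typing import Dict, Iterable, Iterator

# Hypothetical common record shape shared by every ingestion path.
Record = Dict[str, object]


def ingest_csv_batch(text: str, source: str) -> Iterator[Record]:
    """Batch: read a complete structured CSV export in one pass."""
    for row in csv.DictReader(io.StringIO(text)):
        yield {"source": source, "payload": dict(row)}


def ingest_json_stream(lines: Iterable[str], source: str) -> Iterator[Record]:
    """Stream: parse semi-structured JSON events one at a time as they arrive."""
    for line in lines:
        line = line.strip()
        if line:  # skip blank keep-alive lines
            yield {"source": source, "payload": json.loads(line)}


def ingest_text_documents(docs: Iterable[str], source: str) -> Iterator[Record]:
    """Unstructured: keep the raw text and attach only minimal metadata."""
    for doc in docs:
        yield {"source": source, "payload": {"text": doc, "length": len(doc)}}


if __name__ == "__main__":
    crm_export = "id,name\n1,Acme\n2,Globex\n"
    click_events = ['{"user": 1, "page": "/pricing"}', "", '{"user": 2, "page": "/docs"}']
    records = [
        *ingest_csv_batch(crm_export, "crm_db"),
        *ingest_json_stream(click_events, "clickstream"),
        *ingest_text_documents(["Support ticket: login fails"], "helpdesk"),
    ]
    for record in records:
        print(record)
```

Using generators means the stream path can consume an unbounded iterator of events without loading everything into memory, while the batch path reads a finished export.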

2. Data Processing

This layer provides a set of tools to curate and transform your data so that it is analytics-ready for downstream BI tools.
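As an illustration of what "analytics-ready" can mean, here is a small curation step written as a sketch. It assumes hypothetical raw order rows arriving as strings; it trims and normalizes text, casts types, drops incomplete rows, and removes duplicate deliveries. Real processing layers typically run such rules at scale, but the logic is the same.

```python
from datetime import date
from typing import Dict, List


def curate_orders(raw_rows: List[Dict[str, str]]) -> List[Dict[str, object]]:
    """Clean hypothetical raw order rows into an analytics-ready shape."""
    seen = set()
    curated = []
    for row in raw_rows:
        order_id = row.get("order_id", "").strip()
        amount = row.get("amount", "").strip()
        order_date = row.get("order_date", "").strip()
        if not (order_id and amount and order_date):
            continue  # incomplete row: not usable downstream
        if order_id in seen:
            continue  # duplicate delivery from an upstream source
        seen.add(order_id)
        curated.append({
            "order_id": order_id,
            "country": row.get("country", "").strip().upper() or None,
            "amount": round(float(amount), 2),
            "order_date": date.fromisoformat(order_date),
        })
    return curated


if __name__ == "__main__":
    raw = [
        {"order_id": "A1", "country": " us ", "amount": "19.90", "order_date": "2020-12-01"},
        {"order_id": "A1", "country": "US", "amount": "19.90", "order_date": "2020-12-01"},
        {"order_id": "A2", "country": "de", "amount": "", "order_date": "2020-12-02"},
    ]
    print(curate_orders(raw))
```

Here the second row is dropped as a duplicate and the third because its amount is missing, leaving one typed, normalized row for BI tools to consume.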

3. Data Management and Intelligence

This layer secures your data and enforces governance by controlling who can see which data. It is also where you apply global capabilities such as metadata management, search, and data lineage.
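The sketch below models two of these governance duties in a toy form: column-level access rules per role, and a lineage log that can be walked back to every upstream dataset. The `Catalog` class, role names, and dataset names are all hypothetical; production data fabrics implement this with dedicated catalog and policy services.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Catalog:
    """Toy metadata catalog: column-level access rules plus a lineage log."""
    # role -> columns that role may read (hypothetical policy model)
    column_grants: Dict[str, Set[str]] = field(default_factory=dict)
    # dataset -> upstream datasets it was built from
    lineage: Dict[str, List[str]] = field(default_factory=dict)

    def read(self, role: str, rows: List[dict]) -> List[dict]:
        """Return only the columns the role may see; unknown roles see nothing."""
        allowed = self.column_grants.get(role, set())
        return [{k: v for k, v in row.items() if k in allowed} for row in rows]

    def record_lineage(self, output: str, inputs: List[str]) -> None:
        self.lineage[output] = list(inputs)

    def upstream(self, dataset: str) -> Set[str]:
        """Walk the lineage graph back to every dataset that feeds `dataset`."""
        found: Set[str] = set()
        stack = list(self.lineage.get(dataset, []))
        while stack:
            parent = stack.pop()
            if parent not in found:
                found.add(parent)
                stack.extend(self.lineage.get(parent, []))
        return found


if __name__ == "__main__":
    catalog = Catalog(column_grants={
        "analyst": {"customer_id", "region"},
        "finance": {"customer_id", "region", "revenue"},
    })
    rows = [{"customer_id": 1, "region": "EU", "revenue": 1200}]
    print(catalog.read("analyst", rows))  # revenue column is hidden
    catalog.record_lineage("revenue_report", ["curated_orders"])
    catalog.record_lineage("curated_orders", ["raw_orders", "fx_rates"])
    print(catalog.upstream("revenue_report"))
```

Defaulting to an empty grant set makes the policy deny-by-default, which is the usual safer choice for governance rules.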

4. Data Orchestration

This component coordinates every stage of the end-to-end data workflow. It lets you define when and how often each pipeline runs, and how the data those pipelines produce is managed.
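A minimal orchestration sketch using Python's standard `graphlib.TopologicalSorter` (Python 3.9+) to run stages only after their dependencies, plus a simple "how often" check. The stage names and the hourly interval are hypothetical; real orchestrators add retries, parallelism, and persistent run history.

```python
from datetime import datetime, timedelta
from graphlib import TopologicalSorter  # Python 3.9+
from typing import Callable, Dict, List, Set

# Hypothetical pipeline: each stage maps to the stages it depends on.
PIPELINE: Dict[str, Set[str]] = {
    "ingest_crm": set(),
    "ingest_clicks": set(),
    "curate": {"ingest_crm", "ingest_clicks"},
    "apply_governance": {"curate"},
    "publish_dashboard": {"apply_governance"},
}

# How often the whole pipeline should run (hypothetical setting).
RUN_EVERY = timedelta(hours=1)


def is_due(last_run: datetime, now: datetime, every: timedelta = RUN_EVERY) -> bool:
    """True once at least one interval has passed since the last run."""
    return now - last_run >= every


def run_pipeline(stages: Dict[str, Set[str]],
                 tasks: Dict[str, Callable[[], None]]) -> List[str]:
    """Run every stage after all of its dependencies; return the run order."""
    order = list(TopologicalSorter(stages).static_order())
    for name in order:
        tasks[name]()
    return order


if __name__ == "__main__":
    tasks = {name: (lambda n=name: print("running", n)) for name in PIPELINE}
    if is_due(datetime(2020, 12, 11, 8, 0), datetime(2020, 12, 11, 9, 0)):
        run_pipeline(PIPELINE, tasks)
```

Declaring dependencies rather than a fixed sequence lets the sorter work out a valid order, and it raises `graphlib.CycleError` if the stages accidentally depend on each other in a loop.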

5. Data Discovery

In this layer you can apply data modeling, data preparation, and data curation. It lets your analysts find and consume data spread across different “silos” as if it were part of the same dataset, and extract valuable insights from it. This is arguably the most important part of a data fabric, because it directly addresses the silo problem.
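To make "as if they were part of the same dataset" concrete, the sketch below joins two hypothetical silos, a CRM table and a billing table that name the customer key differently, into one unified view. The field mapping (`cust_id` and `customer` both meaning `customer_id`) is the kind of metadata a discovery layer would hold; here it is simply hard-coded as an assumption.

```python
from typing import Dict, List

# Two hypothetical silos describing the same customers with different schemas.
crm = [
    {"cust_id": "C1", "name": "Acme"},
    {"cust_id": "C2", "name": "Globex"},
]
billing = [
    {"customer": "C1", "invoice_total": 1200.0},
    {"customer": "C1", "invoice_total": 300.0},
    {"customer": "C3", "invoice_total": 50.0},
]


def unified_view(crm_rows: List[dict], billing_rows: List[dict]) -> List[Dict[str, object]]:
    """Present both silos as one dataset keyed on a shared customer_id."""
    # Aggregate billing per customer first.
    totals: Dict[str, float] = {}
    for row in billing_rows:
        key = row["customer"]
        totals[key] = totals.get(key, 0.0) + row["invoice_total"]
    # Left join: every CRM customer appears, with 0.0 if never billed.
    return [
        {"customer_id": row["cust_id"], "name": row["name"],
         "billed": totals.get(row["cust_id"], 0.0)}
        for row in crm_rows
    ]


if __name__ == "__main__":
    for record in unified_view(crm, billing):
        print(record)
```

Because this is a left join on the CRM side, billing rows for an unknown customer (`C3` here) do not appear in the view; surfacing such mismatches is itself a useful discovery signal.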

6. Data Access

This layer delivers data to analysts, either directly or through queries, APIs, data services, and dashboards.

It often builds semantics, intelligence, and rules into the access mechanisms so that data arrives in the required form and format.
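A small sketch of an access layer using Python's built-in `sqlite3` as a stand-in data store. It assumes a hypothetical semantic rule that maps business-friendly labels to physical column names, runs one query, and then delivers the result either as JSON (for an API or data service) or as CSV (for a spreadsheet or BI import). The table, labels, and values are illustrative only.

```python
import csv
import io
import json
import sqlite3

# Hypothetical semantic rule: business-friendly labels -> physical columns.
SEMANTIC_NAMES = {"Customer": "name", "Region": "region", "Revenue": "revenue"}


def build_store() -> sqlite3.Connection:
    """Create an in-memory table standing in for the fabric's data store."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (name TEXT, region TEXT, revenue REAL)")
    conn.executemany(
        "INSERT INTO customers VALUES (?, ?, ?)",
        [("Acme", "EU", 1500.0), ("Globex", "US", 800.0)],
    )
    return conn


def fetch(conn: sqlite3.Connection, fmt: str) -> str:
    """Query once with business labels, then deliver in the requested format."""
    # Built from a fixed in-code mapping, never from user input.
    select = ", ".join(f'{col} AS "{label}"' for label, col in SEMANTIC_NAMES.items())
    cur = conn.execute(f"SELECT {select} FROM customers ORDER BY revenue DESC")
    labels = [d[0] for d in cur.description]
    rows = cur.fetchall()
    if fmt == "json":  # e.g. for an API or data service
        return json.dumps([dict(zip(labels, r)) for r in rows])
    if fmt == "csv":  # e.g. for a spreadsheet or BI import
        buf = io.StringIO()
        writer = csv.writer(buf, lineterminator="\n")
        writer.writerow(labels)
        writer.writerows(rows)
        return buf.getvalue()
    raise ValueError(f"unsupported format: {fmt}")


if __name__ == "__main__":
    store = build_store()
    print(fetch(store, "json"))
    print(fetch(store, "csv"))
```

Keeping the label mapping in one place means every consumer sees the same business names, whichever delivery format it asks for.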
